A fully connected (FC) layer is the component that actually does the discriminative learning in a deep neural network. It is a simple multilayer perceptron (MLP) that learns weights for identifying an object class. You can read about MLPs in any ML textbook.

If you take a simple 1-2 layer neural network, the weights are learned so that an input can be discriminated into various classes. This kind of network works great on structured data (like housing prices), where you know what kind of information will arrive in each input channel/column.
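As a rough illustration of what such a layer computes, here is a minimal pure-Python sketch of one fully connected layer followed by a softmax. The feature names, weights and class labels are made up for the example and are not learned from any real data; in practice you would use a framework such as PyTorch or Keras.

```python
import math

def fully_connected(inputs, weights, biases):
    """One FC layer: every output is a weighted sum of ALL inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical structured input: [area, bedrooms, age], already scaled to 0..1.
# Each column always carries the same kind of information.
house = [0.8, 0.5, 0.1]

# Hypothetical weights for two classes ("cheap", "expensive"); one row per class.
weights = [[-1.0, -0.5, 1.0],
           [1.0, 0.5, -1.0]]
biases = [0.0, 0.0]

scores = fully_connected(house, weights, biases)
probs = softmax(scores)
print(scores, probs)
```

Because column 0 always means "area", a large positive weight on it for the "expensive" class makes sense for every input row.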

An image is a grid of pixels (say 100x100x3), each holding a value between 0 and 255 that denotes intensity. If you stretch it out into one row, you get an input with 30,000 columns (100 × 100 × 3).
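The "stretching" above can be sketched as follows. The image here is dummy data, and the row-major ordering (row, then column, then channel) is an assumption; it is a common layout but not the only possible one.

```python
HEIGHT, WIDTH, CHANNELS = 100, 100, 3

# Dummy image as nested lists [row][column][channel], every value in 0..255.
image = [[[(r + c + ch) % 256 for ch in range(CHANNELS)]
          for c in range(WIDTH)]
         for r in range(HEIGHT)]

# Stretch the image out into a single row of numbers.
flat = [value for row in image for pixel in row for value in pixel]

def flat_index(r, c, ch):
    """Column in the flat row that holds pixel (r, c), channel ch."""
    return (r * WIDTH + c) * CHANNELS + ch

print(len(flat))  # number of input columns
```

Note that each flat column is permanently tied to one pixel position, which is exactly what causes trouble when the object moves.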

When people tried to use a 1-2 layer neural network for computer vision, they ran into a problem: the actual object could be tilted, distorted, scaled or repositioned, which means a particular kind of information could show up in any of the input features/columns, or in any group of them. The neurons in the simple network are tied to one fixed area of the image and become activated only when the object is in that area. Clearly this did not work well.

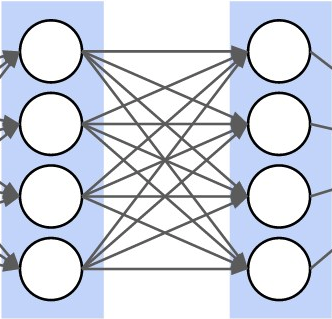
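The fixed-position problem can be reproduced with a toy example. This is a sketch under simplifying assumptions: the "object" is a 2x2 bright square on a 6x6 grayscale image, and the neuron's weights are hand-set to match the object in the top-left corner rather than learned.

```python
SIZE = 6

def draw_object(top, left):
    """Blank SIZE x SIZE image with a 2x2 bright square at (top, left)."""
    img = [[0.0] * SIZE for _ in range(SIZE)]
    for r in range(top, top + 2):
        for c in range(left, left + 2):
            img[r][c] = 1.0
    return img

def flatten(img):
    return [v for row in img for v in row]

# Hand-set weights: the neuron "looks" only at the top-left 2x2 area.
weights = flatten(draw_object(0, 0))

def neuron(img, threshold=2.0):
    """Weighted sum over the flattened image, then a simple threshold."""
    activation = sum(w * x for w, x in zip(weights, flatten(img)))
    return activation, activation > threshold

same_place = neuron(draw_object(0, 0))  # object where the neuron expects it
moved = neuron(draw_object(3, 3))       # same object, different position
print(same_place, moved)
```

The same object produces a strong activation in one position and none at all after being moved, even though nothing about the object itself changed.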

Consider a deep neural network that has layers X-Y-Z followed by a last FC layer (with, say, 100 inputs). X, Y and Z could be convolution, pooling, etc.

All the earlier layers X, Y, Z in a deep neural network work together to come up with a scale- and position-invariant representation of the input image, such that whenever a particular object is found anywhere in the input image, a fixed column (or a fixed set of columns) in the input to the FC layer becomes activated. It's like: if you see a chair anywhere in the image, activate columns 10, 11, 80 and 99. (Disclaimer: oversimplified.)
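A toy version of this idea, assuming X is a single convolution and Y is a global max pooling step: the filter is hand-set rather than learned, and the sketch only demonstrates position invariance, not scale invariance. Real networks stack many such layers.

```python
SIZE = 6

def draw_object(top, left):
    """Blank SIZE x SIZE image with a 2x2 bright square at (top, left)."""
    img = [[0.0] * SIZE for _ in range(SIZE)]
    for r in range(top, top + 2):
        for c in range(left, left + 2):
            img[r][c] = 1.0
    return img

# Hypothetical hand-set filter that responds to the 2x2 square.
KERNEL = [[1.0, 1.0],
          [1.0, 1.0]]

def convolve(img, kernel):
    """Slide the kernel over every position (no padding, stride 1)."""
    k = len(kernel)
    out_size = len(img) - k + 1
    return [[sum(kernel[i][j] * img[r + i][c + j]
                 for i in range(k) for j in range(k))
             for c in range(out_size)]
            for r in range(out_size)]

def global_max_pool(feature_map):
    """Keep only the strongest response, discarding where it occurred."""
    return max(max(row) for row in feature_map)

def feature(img):
    return global_max_pool(convolve(img, KERNEL))

feature_top_left = feature(draw_object(0, 0))
feature_bottom_right = feature(draw_object(4, 4))
feature_empty = feature([[0.0] * SIZE for _ in range(SIZE)])
print(feature_top_left, feature_bottom_right, feature_empty)
```

Wherever the square sits, the same single number lights up, so an FC layer reading that number always finds the "square present" signal in the same column.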

Now, thanks to this magic, the information arriving in each input column of the last FC layer is fixed (like in the housing prices example), and discriminative learning becomes easy.

In fact, you can take a pre-trained deep neural network, get rid of the last fully connected layer and add a new fully connected layer that learns to discriminate your own dataset. This is called transfer learning (you have probably heard of the Inception model, which is often reused this way).
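Here is a minimal sketch of that recipe in pure Python. Everything in it is a stand-in: `FILTERS` plays the role of the frozen pre-trained layers (hand-set here, not actually pre-trained), the dataset is four tiny synthetic images, and the new head is a single logistic unit trained with plain gradient descent. With a real model such as Inception you would do the same thing through your framework's API.

```python
import math

SIZE = 5

def bar(top, left, horizontal):
    """Blank SIZE x SIZE image with a 2-pixel bar starting at (top, left)."""
    img = [[0.0] * SIZE for _ in range(SIZE)]
    img[top][left] = 1.0
    if horizontal:
        img[top][left + 1] = 1.0
    else:
        img[top + 1][left] = 1.0
    return img

# Stand-in for the "pre-trained" layers: fixed filters that are never updated.
FILTERS = [
    [[1.0, 1.0]],    # responds most to horizontal bars
    [[1.0], [1.0]],  # responds most to vertical bars
]

def frozen_features(img):
    """Convolution + global max pooling per filter; no trainable parameters."""
    feats = []
    for kernel in FILTERS:
        kh, kw = len(kernel), len(kernel[0])
        best = 0.0
        for r in range(SIZE - kh + 1):
            for c in range(SIZE - kw + 1):
                response = sum(kernel[i][j] * img[r + i][c + j]
                               for i in range(kh) for j in range(kw))
                best = max(best, response)
        feats.append(best)
    return feats

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, epochs=200, lr=0.5):
    """Train ONLY the new FC head (logistic regression) by gradient descent."""
    n = len(samples[0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for x, y in zip(samples, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for k in range(n):
                grad_w[k] += error * x[k]
            grad_b += error
        weights = [w - lr * g / len(samples) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(samples)
    return weights, bias

def predict(img, weights, bias):
    z = sum(w * f for w, f in zip(weights, frozen_features(img))) + bias
    return 1 if z > 0 else 0

# "Your own dataset": label 0 = horizontal bar, label 1 = vertical bar.
train_images = [bar(0, 0, True), bar(3, 2, True),
                bar(0, 0, False), bar(2, 4, False)]
train_labels = [0, 0, 1, 1]
train_features = [frozen_features(img) for img in train_images]
weights, bias = train_head(train_features, train_labels)

# Bars at positions never seen during training.
unseen_horizontal = predict(bar(4, 3, True), weights, bias)
unseen_vertical = predict(bar(1, 1, False), weights, bias)
print(unseen_horizontal, unseen_vertical)
```

Only `weights` and `bias` of the new head are learned; the feature extractor stays untouched, which is why a handful of examples is enough in this toy setting.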