Artificial Intelligence (AI) is the buzzword of this decade. Google, Facebook, Twitter and IBM are acquiring companies and people as fast as they can. Computer Vision is one of the core focus areas. Vision is perhaps one of the most difficult problems to solve in AI, and as you might have guessed, it is currently unsolved.

Neural Networks, specifically Convolutional Neural Networks (CNNs), have become very popular. Almost everyone (except a few companies like Vicarious) is using CNNs for image recognition. For the last few years, Stanford has hosted the “ImageNet” challenge for visual recognition. In the last 4 years, CNNs have been winning the competition, getting deeper every year (deep meaning more layers in a neural network). The most recent one had 160+ layers and learned 150 Million parameters.

I belong to that group of people who believe that CNNs may not be how human vision works, although they are evolving towards it. CNNs are winning challenges and are the state of the art. They are pretty good at what they do. They are amazing.

But then, there are several capabilities of human vision that we need to consider. This post lists some of the interesting facts about vision that can help us in putting together a path towards solving the true vision problem.

Our vision is scale invariant

When we see things, we can recognize them even if they are smaller or larger than when we saw them earlier. Sometimes we realize (and acknowledge) that they are different and sometimes we just don’t care. My 2-year-old son recognizes a “Bus” no matter what size it is. He does say “big” bus and “small” bus though. However, when we are looking at pictures of friends, who are represented within a few inches, we appreciate the picture seamlessly - ignoring the scaling. On the other hand, we always try to get bigger and bigger TV screens.

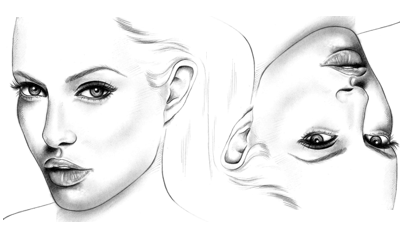

Rotation confuses us, sometimes

When we see a rotated or an upside down image of a face, we have a tough time recognizing it. Unknowingly, we tilt our heads and very soon it makes sense. Apparently, the more you rotate the picture, the more difficult it becomes (although most people would recognize faces in a blink even if they are rotated over 135 degrees). An interesting fact here is that some faces that look “pretty” in correct orientations may look “weird” when upside down.

Relative Dimensions may not matter much

When we see caricatures, the relative dimensions of face and body are mismatched. While we find them funny, we are still able to recognize them and the story behind them. Part of it could be that our brain recognizes people by their faces, and if the face is good enough, a smaller body does not matter.

Our observations are Sparse

Every time we look at a painting or a video, we learn something new about it. This means that at each gaze our brain picks up only some areas of the image. “Saccade”, an interesting term used by brain scientists, describes how we move our focus around, randomly (at least the first time), to get what we can pick up, store and evaluate. This idea is reinforced by the fact that when we try to recall what we saw, we are not able to give complete details. In fact, the completeness of our description is proportional to the amount of time we spent looking at something.

We notice what is Interesting

Recall your last bus ride. While you might have been awake the whole time, looking out of the window, you will not be able to recall every moment, person or object you saw. However, if there had been something interesting like a kangaroo or an accident, you would remember it. Some scientists claim that our brain stores everything it sees but just does not know how to recall it (and some believe hypnotism can give answers).

We can selectively ignore areas

When I see an 8-story building with windows, I can stare at it for hours and create imaginary formations like a ladder, a camera film or something as simple as an 8 or a T. Our brain selectively chooses not to see some areas and creates something new out of what it sees. Sometimes these imaginary structures leave a pretty good imprint. So often we would say, “it’s next to the building that looks like an H”.

The detail of what you see decreases logarithmically, radially

When you focus on an object, the image of the object looks very detailed. It is said that our vision is a 130-megapixel camera. However, try this experiment. Stare at an object on your desk, focused. Now try to think about (not look at) the level of detail of anything that is around it. You will notice that while you can see there is a book on your desk, you can’t read its label (although the color and your memory will immediately tell you what book it is). Scientists say that this is because the concentration of light receptors in your eye decreases logarithmically as you move away from the center. While you are at it, you will realize that color perception is better at the focus and reduces as you move away, again because you have more color receptors at the center.
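To get a feel for what a logarithmic fall-off looks like, here is a rough sketch. It is a toy model and not measured data: the function name `relative_detail`, the 90-degree limit and the formula itself are my assumptions, chosen only so that detail is 1.0 at the point of focus and 0.0 at the edge of the field.

```python
import math


def relative_detail(distance_deg, max_distance_deg=90.0):
    """Toy model (an assumption, not measured data): the share of detail
    left at a given angular distance from the point of focus, falling
    with the logarithm of that distance."""
    if distance_deg < 0:
        raise ValueError("distance must be non-negative")
    # Anything beyond the assumed edge of the field is treated as the edge.
    d = min(distance_deg, max_distance_deg)
    return 1.0 - math.log1p(d) / math.log1p(max_distance_deg)


# Print how quickly the detail drops as we move away from the focus.
for angle in (0, 1, 5, 10, 30, 60, 90):
    print(f"{angle:>2} degrees from focus: {relative_detail(angle):.2f}")
```

The thing to notice is the shape of the curve: most of the detail is lost in the first few degrees around the focus, and very little changes further out, which matches the book-on-the-desk experiment.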

Optical Illusions

So much has been written about illusions that I don’t need to emphasize more. The key point is, what we see is not what we perceive. Our brain plays tricks and hacks.

Perfect Image segmentation

Image segmentation is the part of image processing in which you divide the scene into its constituent parts, like a chair, a table etc. Segmentation is one of the most difficult problems in computer vision (often called “matting” when you are dividing between foreground and background), yet it is something that our brain does really well. Even when you are standing in front of a blue wall wearing a blue shirt, our brain can differentiate between them. Sometimes the brain can’t (think of the green screens that movie houses use to fill in content later), but more often than not it can segment really well.

Smart combinations of parts

Segmentation leaves behind smaller areas that need to be combined to create full objects. For example, a segmentation algorithm would identify your blue shirt, khaki pants, black shoes, skin, hair etc. - all with different colors. However, the brain intelligently combines them to create a full person, carefully ignoring the blue wall and leaving behind any other clutter around. Amazing, isn’t it?

Misplaced constituents complicate the problem

If you take a face and move the eyes, nose and mouth around, you will be surprised to see that you can no longer identify the person. Could it be that our brain predicts what it should find N pixels down and M pixels right, and when it’s not found, the brain assumes this is something new?
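The “N pixels down and M pixels right” idea can be sketched in a few lines. This is only an illustration of the guess above, not how the brain or any real face recognizer works; the part names, the offsets in `EXPECTED_OFFSETS`, the tolerance and the function `layout_matches` are all made up for the example.

```python
# Hypothetical expected positions of face parts, as (dx, dy) pixel offsets
# from the left eye. The numbers are invented for illustration.
EXPECTED_OFFSETS = {
    "right_eye": (40, 0),
    "nose": (20, 30),
    "mouth": (20, 55),
}


def layout_matches(parts, expected=EXPECTED_OFFSETS, anchor="left_eye", tolerance=8):
    """Return True if every visible part sits roughly where it is predicted
    to be relative to the anchor part."""
    anchor_x, anchor_y = parts[anchor]
    for name, (dx, dy) in expected.items():
        if name not in parts:
            # Assumption: a part that is not visible cannot contradict
            # the prediction, so it is skipped.
            continue
        x, y = parts[name]
        if abs(x - (anchor_x + dx)) > tolerance or abs(y - (anchor_y + dy)) > tolerance:
            return False
    return True


normal_face = {"left_eye": (100, 100), "right_eye": (141, 99),
               "nose": (121, 131), "mouth": (119, 156)}
scrambled_face = {"left_eye": (100, 100), "right_eye": (141, 99),
                  "nose": (121, 160), "mouth": (119, 125)}

print(layout_matches(normal_face))     # parts are where they were predicted
print(layout_matches(scrambled_face))  # nose and mouth have swapped places
```

Small deviations within the tolerance still count as the same face, while swapping the nose and the mouth breaks the prediction and the layout is treated as something new.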

Partly Deformed/hidden images can still be identified

An interesting paradox to the last point is that if information is partially hidden and whatever you see is not deformed, you can still identify the object. For example, you can identify a person by just seeing the eyes. You may not, however, identify people well by their hands, feet, ears etc. This could possibly be attributed to the fact that a majority of observation time is focused on the face of the person.

Colors are adjectives

We can identify things in black & white, in color, and in different light intensities. You can identify any car if you have seen just a few, no matter what color it is. The car is a noun. The color of the car is an adjective. However, colors do help us in narrowing down the possibilities. The easiest way to distinguish between an orange and an apple is that oranges are orange in color. Now you might ask: what about the shape of an orange? But think about it - how do you identify a crushed orange or orange juice?

Verbs Matter

Some people believe that we recognize objects not just by their shapes but also by what you could possibly do to them or do with them, i.e. associated verbs. For example, you would have heard people say “it looked like some kind of button” when they are unable to recognize the shape but identify it as a button when they realize it is something that could be used to switch something on. This idea is further supported by the fact that when you see some new type of car - with 3 wheels, engines in the back, no doors, butterfly doors etc. - you still recognize it as a car and not as a house where people live (unless it’s a recreational vehicle, of course).

Shapes are misleading

While you would want to associate shapes with certain objects, an algorithm that uses shapes to distinguish objects will fail if the object is seen from a different angle or perspective, or is partly hidden behind another object (occlusion). You can recognize an apple, an apple cut in 4 pieces (simple as well as in some artistic format) as well as a half-eaten apple. A dress with a tiger skin print immediately strikes you as owing some similarity to tigers, although it’s shaped nothing like a tiger.

Gradients tell a story

Sometimes you can create an entire effect by a clever use of shading or gradients. You can make objects appear in 3 dimensions, or stand out even against a background of the same color. An interesting scenario is that if you are trying to draw a ball, by not coloring a particular portion you can make it look curved (or shiny). The perceived effect of a group of pixels can be determined not by the pixels themselves but by the pixels around them. Fascinating.
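A tiny sketch makes the point about surrounding pixels concrete. It assumes a very crude stand-in for perception: a pixel’s “local contrast” is its value minus the average of its neighbours. The function `local_contrast` and the sample gray values are invented for this illustration.

```python
def local_contrast(row, index, radius=2):
    """Difference between one pixel and the mean of its neighbours within
    `radius` positions. A crude stand-in for perceived brightness."""
    start = max(0, index - radius)
    stop = min(len(row), index + radius + 1)
    neighbours = [row[i] for i in range(start, stop) if i != index]
    if not neighbours:
        return 0.0  # a lone pixel has nothing to be compared with
    return row[index] - sum(neighbours) / len(neighbours)


# The same gray value (128) placed on a dark and on a light surround.
on_dark = [20, 20, 128, 20, 20]
on_light = [230, 230, 128, 230, 230]

print(local_contrast(on_dark, 2))   # positive: the pixel stands out as bright
print(local_contrast(on_light, 2))  # negative: the same pixel reads as dark
```

The middle pixel is identical in both rows, yet the sign of the result flips with the surround, which is the effect that shading and gradients exploit.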

Our brain can (re)create images – sparsely

Think of an elephant. I am 99% sure you would have envisioned what an elephant looks like. Now evaluate how much detail you imagined. Think more. Does the depth of detail improve? This again reinforces the fact that our brain thinks sparsely, optimized for least energy usage. Maybe your unconscious mind tells your “artistic side”: “Hey, for now an elephant is just these 20 concepts. Let’s see where the conversation is going - we will expand as needed”.

Drawing is difficult

Well, you know everything about apples and oranges. You might know everything about what a chair looks like. Well, try to draw one and let’s see if you can get the dimensions, perspective and colors right. If you are a painter/artist - never mind. However, most people can’t draw. There is a debate on whether this is because our brain does not store enough information about a chair (observation lag) or because our motor skills (the system which drives movement and coordination of our hands, fingers etc.) are not trained well enough to convert the concept “chair” into a 2D/3D drawing.

Drawing is a script

If I ask you to draw a picture of a man 10 times, you will follow the exact same routine and draw a stickman, with exactly the same steps (the details like the size of the circle or arms may vary). Some people would draw the torso as a single line, while most people would draw arms and legs as single lines. (Now which CNN can identify this to be a man? My 6-year-old daughter can.) It could be that the brain has a minimalistic concept of a man that you draw to take the discussion further. If I had asked you to draw a man wearing a shirt, your script would be different. I don’t think these scripts are stored as a single object; rather, they are picked and sequenced at runtime using smaller scripts.

Imaginary Vision can fill in parts

I showed a keyboard box to my 2-year-old son and told him it looks like a bus. He was convinced and busy for the next 2 hours. It did not have wheels, windows etc. Other than the fact that it was rectangular, it had no resemblance to a bus. But, with his imagination, he was able to reconstruct a bus in his mind. When our brain looks at something, a lot of information is backfilled, leading to imaginations, illusions and misinterpretations.

In Memory/Imagination, we see in 3rd person

If I tell you to imagine yourself driving a car, you would not “see” just the dashboard. Instead you would (for example) “see” as if you were sitting in the next seat, and you would see yourself behind the wheel. When you recall an interview, you actually see the scene of the room with yourself sitting and talking. Interestingly, that is not what our eyes originally saw. Our brain recreated it.

Newborns stare a lot

If you watch newborn babies, in the first few months their response to all the crazy things we do is pretty minimal. However, they would stare at your face, or at toys, for a really long time. Could it be that their brain is making some low-level representation of concepts that will evolve into a near perfect image recognition system?

We “See” dreams

Well, again a very interesting research area. We always “see” a dream. Why is vision involved when your eyes are closed? People talk, kick and scream when seeing a dream. It seems real, although most of my dreams do not obey the laws of physics. This means that while the eyes are a source of sensory input, the real vision is happening inside the brain. Which further means that if your brain could be trained to imagine shapes by touching them, very soon you could be seeing with your touch.

We adapt/unlearn interpretations in a snap

We could have a wrong interpretation of what an object is for years. When someone corrects us, we update our understanding right away. This ability to unlearn would be amazing to have in computer vision. Quite often, the person correcting us will show why, for example, a fish is a Snook or not. However, if you compare it to something that involves a motor skill, for example learning a new baseball shot, it takes time and practice to correct it. Adaptation and organization of the mind is an interesting research area.

Our Brain ignores the shadow

When you see a person, there is almost always an associated shadow. However, our brain does not associate this pixel pattern as part of the body. In fact, if it’s sunny and you do not see a shadow, you would not even notice it. Why does our brain ignore the shadow? Is it because it has a logical explanation for it and, unless the context needs it, it’s useless to include it in the interpretation?

A true vision solution should, in my opinion, be something that picks up sensory input, identifies the context, prepares a list of possibilities and starts predicting. If the prediction is correct, it should match what you see next. The repository of possibilities should get updated with experience.
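The loop described above (context, possibilities, prediction, comparison, update) can be written down as a very small sketch. It is nowhere near a vision system: contexts and observations are plain strings, and the class `PredictiveObserver` with its methods is a hypothetical name I made up to show the shape of the idea.

```python
from collections import Counter, defaultdict


class PredictiveObserver:
    """Keeps, for every context, a count of what was actually seen there."""

    def __init__(self):
        # context -> Counter of observations made in that context
        self.experience = defaultdict(Counter)

    def predict(self, context):
        """Return the most frequently seen observation for this context,
        or None when there is no experience with it yet."""
        seen = self.experience.get(context)
        if not seen:
            return None
        return seen.most_common(1)[0][0]

    def observe(self, context, actual):
        """Predict first, then compare with what was actually seen and
        update the repository of possibilities either way."""
        predicted = self.predict(context)
        self.experience[context][actual] += 1
        return predicted == actual


observer = PredictiveObserver()
for thing in ["bus", "bus", "car", "bus"]:
    was_right = observer.observe("road", thing)
    print(f"saw {thing!r} on the road, prediction correct: {was_right}")
```

The first observation can never be predicted because there is no experience yet; after that the predictions are driven by whatever has been seen most often in the same context, and every observation, right or wrong, updates the repository.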

The following work has inspired a lot of my observations:

- The book The Society of Mind by Prof Marvin Minsky, co-founder of the MIT AI Lab - https://en.wikipedia.org/wiki/Marvin_Minsky

- The book On Intelligence by Jeff Hawkins, founder of Numenta - http://numenta.com/

- The Convolutional Neural Networks course CS231n by Stanford University - http://cs231n.stanford.edu/

- Videos and articles by Prof Richard Radke - https://www.ecse.rpi.edu/~rjradke/

- Articles published by the Vicarious team - http://www.vicarious.com/

- The book How to Create a Mind by Ray Kurzweil - http://www.kurzweilai.net/

- Practopoiesis by Prof Danko Nikolic - http://www.danko-nikolic.com/practopoiesis/

I think this list will keep on growing as I remember more stuff. Suggestions and Comments are welcome.